Predictive modeling is a powerful technique in data science that allows us to forecast future outcomes based on historical data. By leveraging statistical algorithms and machine learning, predictive models can identify patterns and relationships in data to make predictions about unknown or future events. This capability has made predictive modeling invaluable across numerous industries, from finance and marketing to healthcare and manufacturing.

As a data scientist with years of experience building predictive models, I’ve seen firsthand how these tools can drive better decision-making and create immense business value. However, getting started with predictive modeling can seem daunting for beginners. That’s why I’ve created this comprehensive guide to walk you through the key concepts and steps involved in building your first predictive model.

In this article, we’ll cover:

- What predictive modeling is and why it’s important

- The main types of predictive modeling techniques

- A step-by-step process for building predictive models

- Best practices and tips for successful modeling

- Real-world examples and applications

- Resources for further learning

By the end, you’ll have a solid foundation to start experimenting with predictive modeling in your own projects. Let’s dive in!

Understanding Predictive Modeling

What is Predictive Modeling?

At its core, predictive modeling is about using data to make informed predictions about future outcomes or behaviors. It involves building mathematical models that can learn patterns from historical data and use those patterns to generate predictions on new data.

For example, a predictive model might:

- Forecast sales for the next quarter based on past sales data and economic indicators

- Estimate the likelihood of a customer churning based on their usage patterns and demographics

- Predict equipment failures in a factory based on sensor readings and maintenance logs

The key is that these models can take in new data points and output predictions, allowing us to make data-driven decisions about future events.

Types of Predictive Modeling Techniques

There are several broad categories of predictive modeling techniques, each suited for different types of problems:

Regression: Used to predict continuous numerical values. Examples include linear regression, polynomial regression, and decision tree regression.

Classification: Used to predict categorical outcomes or class labels. Popular methods include logistic regression, decision trees, random forests, and support vector machines.

Time Series Forecasting: Specialized techniques for making predictions with time-based data, like ARIMA models and Prophet.

Clustering: While not strictly predictive, clustering algorithms like K-means can be used to segment data and inform predictive models.

The choice of technique depends on the specific problem, the type of data available, and the desired outcome. Often, data scientists will experiment with multiple approaches to find the best performing model.
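To make the difference between regression and classification concrete, here is a minimal sketch in Python with scikit-learn (an assumed library choice). It uses synthetic data from `make_regression` and `make_classification` purely for illustration. The regression model returns continuous numbers, while the classifier returns class labels and, optionally, class probabilities.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.linear_model import LinearRegression, LogisticRegression

# Regression: the target is a continuous number (e.g., next quarter's sales).
X_reg, y_reg = make_regression(n_samples=200, n_features=3, noise=10.0, random_state=0)
reg = LinearRegression().fit(X_reg, y_reg)
reg_preds = reg.predict(X_reg[:5])  # real-valued predictions

# Classification: the target is a class label (e.g., churned = 1, stayed = 0).
X_clf, y_clf = make_classification(n_samples=200, n_features=4, random_state=0)
clf = LogisticRegression().fit(X_clf, y_clf)
clf_preds = clf.predict(X_clf[:5])              # discrete labels, 0 or 1
clf_probs = clf.predict_proba(X_clf[:5])[:, 1]  # estimated probability of class 1

print(reg_preds)
print(clf_preds, clf_probs.round(2))
```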

Machine Learning Algorithms in Predictive Modeling

Many predictive modeling techniques leverage machine learning algorithms to improve accuracy and handle complex data relationships. Some key machine learning approaches used in predictive modeling include:

- Linear Models: Simple but powerful for many problems. Examples include linear regression and logistic regression.

- Tree-Based Models: Decision trees, random forests, and gradient boosting machines excel at capturing non-linear relationships.

- Neural Networks: Deep learning models that can handle very complex patterns, especially useful for image and text data.

- Support Vector Machines: Effective for classification tasks, particularly with high-dimensional data.

- Ensemble Methods: Combining multiple models to improve overall performance, like random forests or stacking.

Understanding the strengths and weaknesses of these algorithms is crucial for selecting the right approach for your specific predictive modeling task.

Steps to Build a Predictive Model

Now that we’ve covered the basics, let’s walk through the step-by-step process of building a predictive model. I’ll share insights from my own experiences to help you navigate common challenges.

1. Data Preparation

The foundation of any good predictive model is high-quality, relevant data. Here’s how to get your data ready for modeling:

Data Collection:

Gather historical data that’s relevant to the prediction you want to make. This might involve querying databases, accessing APIs, or even manual data entry. Ensure you have enough data to capture the patterns you’re interested in – I typically aim for at least a few thousand records, but more is usually better.

Data Preprocessing:

Raw data is rarely ready for modeling out of the box. You’ll need to:

- Clean the data by handling missing values, removing duplicates, and correcting errors.

- Format data consistently (e.g., date formats, units of measurement).

- Handle outliers that could skew your model.

In my experience, this step often takes the most time, but it’s crucial for building reliable models.
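A minimal pandas sketch of these cleaning steps follows. The table and its columns (`signup_date`, `monthly_spend`, `region`) are hypothetical, and filling with the median and capping at 1.5 × IQR are only two common choices among many. In a real project, you would compute the median and the caps on the training data only and reuse them on new data.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with a duplicate row, a missing value, messy labels, and an outlier.
raw = pd.DataFrame({
    "signup_date": ["2023-01-05", "2023-01-05", "2023-02-10", "2023-03-15",
                    "2023-03-20", "2023-04-01", "2023-04-09"],
    "monthly_spend": [40.0, 40.0, np.nan, 55.0, 48.0, 52.0, 5000.0],
    "region": ["north", "north", "South ", "south", "North", "south", "north"],
})

df = raw.drop_duplicates().copy()                      # remove exact duplicate rows
df["signup_date"] = pd.to_datetime(df["signup_date"])  # store dates as a datetime type
df["region"] = df["region"].str.strip().str.lower()    # normalize inconsistent labels

# Fill missing values with the median, which the outlier barely affects.
df["monthly_spend"] = df["monthly_spend"].fillna(df["monthly_spend"].median())

# Cap values lying more than 1.5 * IQR beyond the first or third quartile.
q1, q3 = df["monthly_spend"].quantile([0.25, 0.75])
iqr = q3 - q1
df["monthly_spend"] = df["monthly_spend"].clip(lower=q1 - 1.5 * iqr, upper=q3 + 1.5 * iqr)

print(df)
```

IQR capping acts on both tails: on this tiny sample it also nudges the smallest value (40) up slightly, which is one reason to inspect outliers before deciding how to treat them.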

Feature Selection and Engineering:

Identify the most relevant variables (features) for your prediction task. This might involve:

- Removing redundant or irrelevant features

- Creating new features by combining or transforming existing ones

- Encoding categorical variables

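For example, when building a model to predict house prices, I found that creating a “price per square foot” feature significantly improved performance. One caution: a feature like this must be computed from other information (for example, the average price per square foot of comparable past sales nearby), never from the sale price you are trying to predict, or it leaks the target into the model.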
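The sketch below shows feature creation and categorical encoding with pandas on a hypothetical housing table (all column names and values are made up). It derives new features only from non-target columns and one-hot encodes the neighborhood.

```python
import pandas as pd

# Hypothetical housing data; "price" is the target we want to predict.
houses = pd.DataFrame({
    "sqft": [1400, 2100, 850, 1750],
    "bedrooms": [3, 4, 2, 3],
    "year_built": [1995, 2010, 1978, 2003],
    "sale_year": [2022, 2023, 2022, 2023],
    "neighborhood": ["oak", "elm", "oak", "pine"],
    "price": [310_000, 495_000, 190_000, 402_000],
})

features = houses.drop(columns="price")  # keep the target out of the feature table

# New features built from existing columns, with no target information involved.
features["age_at_sale"] = features["sale_year"] - features["year_built"]
features["sqft_per_bedroom"] = features["sqft"] / features["bedrooms"]
features = features.drop(columns=["year_built", "sale_year"])  # now redundant

# One-hot encode the categorical column into 0/1 indicator columns.
features = pd.get_dummies(features, columns=["neighborhood"], dtype=int)
print(features)
```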

Splitting Data:

Divide your dataset into training and test sets. The training set (typically 70-80% of the data) is used to build the model, while the test set is held back to evaluate performance. This split is crucial for assessing how well your model generalizes to new data.
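With scikit-learn, an 80/20 split is a single call to `train_test_split`. The sketch below uses synthetic data; `random_state` simply makes the split reproducible.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=1000, n_features=5, noise=5.0, random_state=42)

# Hold back 20% of the rows as a test set the model never sees during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
print(X_train.shape, X_test.shape)
```

For classification with imbalanced classes, passing `stratify=y` keeps class proportions similar in both sets. For time series, a random shuffle would let the model train on the future, so split chronologically instead (for example with `shuffle=False` on time-ordered data).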

2. Model Selection and Training

With your data prepared, it’s time to choose and train your predictive model:

Choosing an Algorithm:

Select an appropriate algorithm based on your problem type (regression, classification, etc.) and data characteristics. Consider factors like:

- Interpretability requirements

- Training time and computational resources

- Handling of non-linear relationships

For beginners, I often recommend starting with simpler models like linear regression or decision trees before moving on to more complex algorithms.

Training the Model:

Use your chosen algorithm to train the model on the training data. This process involves:

- Initializing the model with default parameters

- Feeding in the training data

- Letting the algorithm adjust its internal parameters to minimize prediction errors

Most machine learning libraries in Python (like scikit-learn) make this process straightforward with just a few lines of code.
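Those few lines might look like the sketch below, which trains a scikit-learn decision tree on synthetic data (the dataset and the choice of `DecisionTreeRegressor` are illustrative assumptions).

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=500, n_features=4, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Initialize the model with default hyperparameters (random_state only fixes tie-breaking).
model = DecisionTreeRegressor(random_state=0)

# Feed in the training data; fit() chooses the tree's splits to reduce squared error.
model.fit(X_train, y_train)

# The fitted model can now produce predictions for rows it has not seen.
y_pred = model.predict(X_test)
print(y_pred[:5])
```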

3. Model Evaluation

Once your model is trained, it’s crucial to rigorously evaluate its performance:

Evaluation Metrics:

Choose appropriate metrics based on your problem type:

- For regression: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R-squared

- For classification: Accuracy, Precision, Recall, F1-score, AUC-ROC

I always calculate multiple metrics to get a well-rounded view of model performance.
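The sketch below computes each of these metrics with `sklearn.metrics` on small hand-made arrays (the numbers are invented for illustration). RMSE is taken as the square root of MSE, and AUC-ROC is computed from predicted probabilities rather than hard labels.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score, f1_score, mean_squared_error, precision_score,
    r2_score, recall_score, roc_auc_score,
)

# Regression: true vs. predicted values (toy numbers).
y_true_reg = np.array([3.0, 5.0, 2.5, 7.0])
y_pred_reg = np.array([2.5, 5.0, 3.0, 8.0])
mse = mean_squared_error(y_true_reg, y_pred_reg)
rmse = np.sqrt(mse)  # back in the target's original units
r2 = r2_score(y_true_reg, y_pred_reg)

# Classification: true labels, predicted labels, and predicted probabilities of class 1.
y_true_clf = np.array([0, 1, 1, 0, 1, 0])
y_pred_clf = np.array([0, 1, 0, 0, 1, 1])
y_prob_clf = np.array([0.1, 0.9, 0.4, 0.2, 0.8, 0.6])
acc = accuracy_score(y_true_clf, y_pred_clf)
prec = precision_score(y_true_clf, y_pred_clf)  # of predicted 1s, share that are truly 1
rec = recall_score(y_true_clf, y_pred_clf)      # of true 1s, share that were found
f1 = f1_score(y_true_clf, y_pred_clf)           # harmonic mean of precision and recall
auc = roc_auc_score(y_true_clf, y_prob_clf)     # uses scores, not hard labels

print(f"MSE={mse:.3f} RMSE={rmse:.3f} R2={r2:.3f}")
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} F1={f1:.3f} AUC={auc:.3f}")
```

With these toy arrays, MSE is 0.375, accuracy, precision, recall, and F1 all come out to 2/3, and AUC-ROC is 8/9.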

Cross-Validation:

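To get a more robust estimate of performance, use techniques like k-fold cross-validation. The data is split into k equal-sized folds, and the model is trained k times, each time on k-1 folds and evaluated on the remaining fold. Averaging the k scores gives a more stable estimate than a single train/test split.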
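In scikit-learn, `cross_val_score` handles the fold loop. This minimal sketch, on synthetic data, runs stratified 5-fold cross-validation and reports the mean and spread of the F1 score; the model and metric are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# 5 folds: train on 4, score on the held-out fold, repeat so each fold is tested once.
# Stratification keeps the class balance similar in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv, scoring="f1")

print(scores.round(3))
print(f"mean F1 = {scores.mean():.3f} (std {scores.std():.3f})")
```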

Assessing Model Performance:

Compare your model’s performance to:

- Baseline models (e.g., always predicting the mean value for regression, or the most frequent class for classification)

- Business requirements or industry benchmarks

- Your own expectations based on domain knowledge

Remember, a model doesn’t need to be perfect to be useful – it just needs to be better than the current alternative.
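One way to run the baseline comparison is scikit-learn's `DummyRegressor`, which ignores the features and always predicts the training mean (`DummyClassifier(strategy="most_frequent")` plays the same role for classification). A sketch on synthetic data:

```python
from sklearn.datasets import make_regression
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=500, n_features=5, noise=20.0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

# Baseline: always predict the mean of the training target.
baseline = DummyRegressor(strategy="mean").fit(X_train, y_train)
model = LinearRegression().fit(X_train, y_train)

baseline_mse = mean_squared_error(y_test, baseline.predict(X_test))
model_mse = mean_squared_error(y_test, model.predict(X_test))
print(f"baseline MSE={baseline_mse:.1f}, model MSE={model_mse:.1f}")
```

If a model cannot clearly beat this kind of baseline, the features or the framing of the problem usually deserve another look before any tuning.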

4. Model Tuning and Optimization

Most models have room for improvement through careful tuning:

Hyperparameter Tuning:

Adjust the model’s hyperparameters to optimize performance. Techniques include:

- Grid search: Trying all combinations of a predefined set of hyperparameter values

- Random search: Sampling random combinations of hyperparameters

- Bayesian optimization: Using probabilistic models to guide the search

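I’ve found that hyperparameter tuning can often boost model performance by 5-10% or more, although the gain varies widely with the model, the data, and how well the default settings already suit the problem.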
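Grid and random search are both available in scikit-learn as `GridSearchCV` and `RandomizedSearchCV`. The sketch below tunes two random-forest hyperparameters on synthetic data; the parameter ranges are arbitrary examples. Bayesian optimization needs a separate library and is not shown.

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

X, y = make_classification(n_samples=400, n_features=8, random_state=0)
rf = RandomForestClassifier(n_estimators=50, random_state=0)

# Grid search: every combination (2 x 3 = 6 candidates), each scored with 3-fold CV.
grid = GridSearchCV(rf, {"max_depth": [3, None], "min_samples_leaf": [1, 5, 10]}, cv=3)
grid.fit(X, y)

# Random search: 5 combinations sampled from integer ranges.
rand = RandomizedSearchCV(
    rf,
    {"max_depth": randint(2, 12), "min_samples_leaf": randint(1, 20)},
    n_iter=5, cv=3, random_state=0,
)
rand.fit(X, y)

print("grid best:", grid.best_params_, round(grid.best_score_, 3))
print("random best:", rand.best_params_, round(rand.best_score_, 3))
```

For brevity the search runs on all of `X`; in practice, run it on the training split only so the test set stays untouched for the final evaluation.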

Ensemble Methods:

Combine multiple models to improve overall predictions:

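- Bagging: Train multiple instances of the same model on different bootstrap samples of the data (random samples drawn with replacement) and average or vote their predictions (e.g., Random Forests, which also randomize the features considered at each split)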

- Boosting: Sequentially train models, with each new model focusing on the errors of the previous ones (e.g., Gradient Boosting Machines)

- Stacking: Use predictions from multiple models as inputs to a final model
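scikit-learn provides all three ensemble styles. This sketch, on synthetic data with arbitrary settings, builds a bagging ensemble of decision trees, a gradient boosting model, and a stacking model that combines a random forest and a logistic regression, then compares their 5-fold cross-validated accuracy.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    BaggingClassifier, GradientBoostingClassifier, RandomForestClassifier,
    StackingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=600, n_features=10, random_state=0)

models = {
    # Bagging: many trees, each fit on a bootstrap sample of the rows.
    "bagging": BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0),
    # Boosting: trees added one at a time, each fit to the current ensemble's errors.
    "boosting": GradientBoostingClassifier(random_state=0),
    # Stacking: a logistic regression learns how to combine the base models' predictions.
    "stacking": StackingClassifier(
        estimators=[
            ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
            ("lr", LogisticRegression(max_iter=1000)),
        ],
        final_estimator=LogisticRegression(),
    ),
}

results = {name: cross_val_score(m, X, y, cv=5).mean() for name, m in models.items()}
for name, score in results.items():
    print(f"{name}: {score:.3f}")
```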

Addressing Overfitting and Underfitting:

- Overfitting: When the model performs well on training data but poorly on new data. Address by simplifying the model, adding regularization, or gathering more training data.

- Underfitting: When the model fails to capture important patterns in the data. Address by increasing model complexity or engineering better features.

Balancing model complexity with generalization ability is one of the key challenges in predictive modeling.
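A quick way to see this trade-off is to vary model complexity and compare training and test scores. In this sketch (synthetic data with label noise added via `flip_y`, an illustrative setup), a depth-1 tree is likely to underfit, while an unrestricted tree memorizes the training set: it scores perfectly there but noticeably worse on the test set.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# flip_y randomly reassigns 20% of labels, noise an unrestricted tree will memorize.
X, y = make_classification(n_samples=600, n_features=10, flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

scores = {}
for depth in [1, 4, None]:  # None = grow until every leaf is pure (most complex)
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0).fit(X_train, y_train)
    scores[depth] = (tree.score(X_train, y_train), tree.score(X_test, y_test))
    print(f"max_depth={depth}: train={scores[depth][0]:.2f}, test={scores[depth][1]:.2f}")
```

A large gap between training and test accuracy signals overfitting; low scores on both signal underfitting.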

5. Model Deployment and Monitoring

The final step is putting your model into action:

Deploying the Model:

Move your model from development to a production environment where it can serve predictions, either in real time or in scheduled batches. This might involve:

- Containerizing the model with tools like Docker

- Setting up API endpoints for receiving input data and returning predictions

- Integrating the model with existing business systems
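Before any container or API exists, the model has to be serialized and reloaded. The sketch below saves a scikit-learn model with `joblib` and wraps prediction in a hypothetical `handle_request` function that takes and returns JSON; in a real service this function would sit behind a web framework and inside a Docker image, neither of which is shown. Only load model files you trust, since joblib files are pickle-based and can execute code when loaded.

```python
import json
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Training side: fit the model and serialize it to a file.
X, y = make_classification(n_samples=200, n_features=3, n_informative=2,
                           n_redundant=0, random_state=0)
model_path = Path(tempfile.mkdtemp()) / "model.joblib"
joblib.dump(LogisticRegression().fit(X, y), model_path)

# Serving side: load the model once at startup, then answer requests.
loaded = joblib.load(model_path)

def handle_request(body: str) -> str:
    """Hypothetical request handler: JSON with a feature list in, JSON probability out."""
    features = json.loads(body)["features"]
    proba = loaded.predict_proba([features])[0, 1]
    return json.dumps({"probability": float(proba)})

print(handle_request('{"features": [0.5, -1.2, 0.3]}'))
```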

Monitoring Performance:

Continuously track your model’s performance in the real world:

- Set up logging to capture prediction inputs and outputs

- Regularly calculate performance metrics on new data

- Watch for concept drift, where the relationships in the data change over time
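Concept drift itself usually shows up as falling performance metrics once true outcomes arrive; a cheaper early signal is to compare the recent distribution of each input feature with its training distribution. The sketch below implements the population stability index (PSI), one common choice among several (a Kolmogorov-Smirnov test is another), in NumPy on simulated data. The 10 quantile bins and the small floor for empty bins are assumptions. A frequently quoted rule of thumb treats a PSI above about 0.25 as a significant shift, but thresholds should be set per use case.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample (e.g., training data) and a recent sample."""
    # Interior bin edges at quantiles of the reference data; values outside the
    # reference range land in the first or last bin.
    inner_edges = np.quantile(expected, np.linspace(0, 1, bins + 1)[1:-1])
    exp_counts = np.bincount(np.searchsorted(inner_edges, expected, side="right"), minlength=bins)
    act_counts = np.bincount(np.searchsorted(inner_edges, actual, side="right"), minlength=bins)
    # Convert to proportions, with a small floor so empty bins do not cause log(0).
    exp_frac = np.clip(exp_counts / len(expected), 1e-6, None)
    act_frac = np.clip(act_counts / len(actual), 1e-6, None)
    return float(np.sum((act_frac - exp_frac) * np.log(act_frac / exp_frac)))

rng = np.random.default_rng(0)
train_feature = rng.normal(0.0, 1.0, 10_000)   # feature values seen at training time
recent_same = rng.normal(0.0, 1.0, 10_000)     # new data, same distribution
recent_shifted = rng.normal(0.5, 1.0, 10_000)  # new data whose mean has drifted

psi_same = population_stability_index(train_feature, recent_same)
psi_shift = population_stability_index(train_feature, recent_shifted)
print(f"PSI, no drift: {psi_same:.3f}; PSI, shifted: {psi_shift:.3f}")
```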

Updating and Retraining:

Be prepared to update your model as needed:

- Retrain periodically on fresh data to capture new patterns

- Consider implementing automated retraining pipelines for models that need frequent updates

In my experience, the work doesn’t stop once a model is deployed – ongoing monitoring and maintenance are crucial for long-term success.

Embarking on Your Predictive Modeling Journey

Now that we’ve covered the key steps, let’s look at some real-world applications and best practices to guide your predictive modeling efforts.

Real-World Examples and Case Studies

Finance: Predictive models are widely used in the financial sector for tasks like:

- Credit scoring: Estimating the likelihood of loan default

- Fraud detection: Identifying suspicious transactions in real-time

- Algorithmic trading: Making automated investment decisions

For example, I worked on a project using gradient boosting machines to predict credit card fraud, achieving a 20% improvement in detection rates over the previous rule-based system.

Marketing: Companies leverage predictive modeling to optimize their marketing efforts:

- Customer segmentation: Grouping customers with similar behaviors

- Churn prediction: Identifying customers likely to leave

- Recommendation systems: Suggesting relevant products to users

A retail client I worked with used a random forest model to predict customer lifetime value, allowing them to tailor marketing strategies for high-value segments.

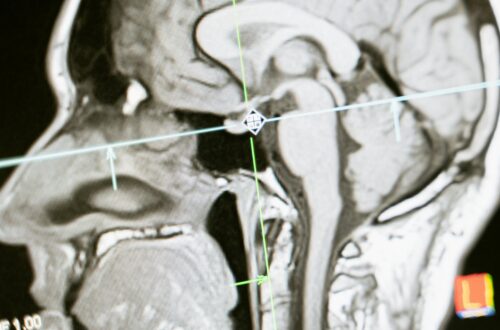

Healthcare: Predictive modeling is increasingly used to support patient care:

- Disease risk assessment: Estimating a patient’s likelihood of developing certain conditions

- Treatment outcome prediction: Forecasting the effectiveness of different interventions

- Resource allocation: Optimizing staffing and equipment based on predicted patient volumes

I collaborated on a project using neural networks to predict hospital readmission risk, helping medical staff prioritize follow-up care for high-risk patients.

Best Practices and Tips

Based on my experience, here are some key tips for successful predictive modeling:

- Start simple: Begin with basic models to establish a baseline before moving to more complex approaches.

- Focus on data quality: Invest time in thorough data cleaning and preprocessing – it pays off in model performance.

- Domain knowledge is crucial: Collaborate with subject matter experts to understand the nuances of your data and problem space.

- Interpret your models: Don’t treat models as black boxes – use techniques like SHAP values to understand how they make predictions.

- Validate assumptions: Check that your data meets the assumptions of your chosen modeling technique.

- Plan for production: Consider deployment requirements early in the modeling process.

- Document everything: Keep detailed records of your data sources, preprocessing steps, and modeling decisions.

- Stay ethical: Be aware of potential biases in your data and models, and consider the ethical implications of your predictions.

Resources for Further Learning

To continue developing your predictive modeling skills, I recommend:

- Online courses: Platforms like Coursera and edX offer in-depth machine learning and data science courses.

- Books: “An Introduction to Statistical Learning” and “Applied Predictive Modeling” are excellent resources.

- Kaggle competitions: Practice on real-world datasets and learn from the community.

- Open-source libraries: Experiment with scikit-learn, TensorFlow, and PyTorch to build models.

- Blogs and forums: Follow blogs like Towards Data Science to keep up with new techniques, and use forums like Stack Overflow to get help with specific problems.

Frequently Asked Questions (FAQ)

Q: How do I know which algorithm to use for my problem?

A: Start by identifying whether you’re dealing with a regression or classification problem. Then, consider factors like data size, feature count, and interpretability requirements. It’s often best to try a few different algorithms and compare their performance.
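A fair comparison uses the same folds and metric for every candidate. This sketch, on synthetic data, compares four of the algorithm families mentioned earlier with 5-fold cross-validation; feature scaling is added in front of the scale-sensitive models (logistic regression and the SVM). The specific settings are illustrative, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=12, random_state=0)

candidates = {
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "decision tree": DecisionTreeClassifier(max_depth=5, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC()),
}

# Same 5-fold CV and the same metric for every candidate.
mean_scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    for name, model in candidates.items()
}
for name, score in sorted(mean_scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:20s} {score:.3f}")
```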

Q: What’s the difference between supervised and unsupervised learning?

A: Supervised learning involves predicting a target variable using labeled training data. Unsupervised learning, on the other hand, looks for patterns in unlabeled data without a specific target to predict.

Q: How do I handle imbalanced or skewed data?

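A: Techniques include oversampling the minority class, undersampling the majority class, or using algorithms that can weight classes differently during training (like weighted random forests). SMOTE (Synthetic Minority Over-sampling Technique) is also a popular approach. Whichever you choose, resample the training data only, so the test set still reflects the real class balance.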
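Two of these options are sketched below with scikit-learn on a synthetic dataset that is roughly 95% one class: class weights (`class_weight="balanced"`, scikit-learn's way of weighting a random forest) and random oversampling with `sklearn.utils.resample`. SMOTE lives in the separate imbalanced-learn package and is not shown. Oversampling is applied after the split, to the training data only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.utils import resample

# Roughly 95% class 0 and 5% class 1.
X, y = make_classification(n_samples=2000, weights=[0.95], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, test_size=0.25, random_state=0
)

# Option 1: class weights, so mistakes on the rare class cost more during training.
weighted_rf = RandomForestClassifier(class_weight="balanced", random_state=0).fit(X_train, y_train)

# Option 2: randomly duplicate minority rows until both classes are the same size.
minority = y_train == 1
X_up, y_up = resample(
    X_train[minority], y_train[minority],
    replace=True, n_samples=int((~minority).sum()), random_state=0,
)
X_bal = np.vstack([X_train[~minority], X_up])
y_bal = np.concatenate([y_train[~minority], y_up])
oversampled_rf = RandomForestClassifier(random_state=0).fit(X_bal, y_bal)

print(np.bincount(y_train), "->", np.bincount(y_bal))
```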

Q: How do I interpret and explain the results of a predictive model?

A: For simpler models like linear regression, you can examine coefficients directly. For more complex models, techniques like SHAP (SHapley Additive exPlanations) values, partial dependence plots, and LIME (Local Interpretable Model-agnostic Explanations) can provide insights into feature importance and decision-making.
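The sketch below reads coefficients from a linear model and, for a random forest, computes permutation importance with scikit-learn's `permutation_importance`: the drop in test score when one feature's values are shuffled. Permutation importance is a swapped-in technique, used here because SHAP and LIME require their own packages; it gives a global ranking of features rather than per-prediction explanations. The data is synthetic, with 2 of 5 features informative.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Only 2 of the 5 features actually drive the target here.
X, y = make_regression(n_samples=500, n_features=5, n_informative=2, noise=5.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Simple model: each coefficient is the change in prediction per unit change in a feature.
linear = LinearRegression().fit(X_train, y_train)
print("coefficients:", linear.coef_.round(2))

# Complex model: measure how much the test score drops when each feature is shuffled.
forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)
result = permutation_importance(forest, X_test, y_test, n_repeats=10, random_state=0)
for i in result.importances_mean.argsort()[::-1]:
    print(f"feature {i}: {result.importances_mean[i]:.3f} +/- {result.importances_std[i]:.3f}")
```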

Q: What are the common challenges and pitfalls in predictive modeling?

A: Some common issues include:

- Overfitting: Building models that perform well on training data but fail to generalize

- Data leakage: Accidentally including information in the training data that wouldn’t be available when making real predictions

- Ignoring business context: Focusing too much on technical metrics without considering practical application

- Neglecting model maintenance: Failing to monitor and update models in production

By being aware of these challenges, you can take steps to mitigate them in your modeling projects.
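Data leakage often creeps in through preprocessing, for example by scaling the whole dataset before cross-validation. A minimal scikit-learn sketch of the safer pattern, on synthetic data, wraps the preprocessing and the model in a `Pipeline` so the scaler is fitted only on each training fold.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Leaky pattern (avoid): calling StandardScaler().fit_transform(X) before splitting
# lets statistics from the evaluation rows shape the training data.

# Safer pattern: the pipeline re-fits the scaler on each CV training fold only.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
scores = cross_val_score(pipeline, X, y, cv=5)
print(f"leak-free CV accuracy: {scores.mean():.3f}")
```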

Predictive modeling is a powerful tool in the data scientist’s toolkit, enabling us to make informed decisions about the future based on historical data. While the process can be complex, breaking it down into steps – from data preparation to model deployment – makes it more approachable. Remember that building effective predictive models is as much an art as it is a science, requiring creativity, experimentation, and domain knowledge alongside technical skills.

As you embark on your predictive modeling journey, don’t be discouraged by initial setbacks. Every model is an opportunity to learn and improve. With practice and persistence, you’ll be building sophisticated predictive models that drive real-world impact in no time. Happy modeling!